In applications that we create for our clients, we often face challenges related to the design and implementation of ETL processes. For example, for systems whose main purpose is to prepare and send Standard Audit File for Tax files (SAF-T), the challenge will be to download sales data from files in a format such as xlsx, csv, txt and even MT940 that contain information about bank transfers. For example, ODS systems will be focused on integrating data from many sources where, in addition to files, source data is stored in databases such as PostgreSQL and MS SQL. Among these many use cases, one requirement is common to all: the solution should be ready for quick and easy addition of new and, most importantly, different data sources that can be transformed and loaded into previously created systems. This is exactly what Pentaho DI gives us, which, thanks to its advanced graphical interface, allows you to create ETL processes with no knowledge of programming languages. The implementation of a simple ETL process will be the main topic of this article, which I hope will inform the reader of the possibilities of this tool.

Pentaho is a Business Intelligence tool that provides the possibility of creating ETL processes, data integration, creating professional dashboards and reporting modules. In 2017, Pentaho became part of Hitachi Vantara, a new company that unites the activities of Pentaho, Hitachi Data Systems and the Hitachi Insight Group. The tool uses the Apache license version 2.0. In this article, we will focus on Pentaho Data Integration (one of the Pentaho modules). PDI, also known as Kettle, consists of a data integration engine and GUI application that allows the user to define ETL processes using jobs and transforms.

Pentaho DI consists of four modules:

In this article, we will use Pentaho Data Integration 8.0, Oracle 12c database and Oracle APEX 18.1.

In this chapter, we will use the specified business case. Suppose we want to calculate the total salary of employees including bonuses. The input data is in a CSV file. The employee’s total salary should be loaded into the EMPLOYEES table.

From the main menu choose File-> New-> Transformation. Choose the following elements from the side menu:

Start building the ETL process. Link the elements in the following order: CSV file input (Extract), Calculator (Transform), Table Output (Load);

Then double-click the CSV file input element. Choose the location of the source file and choose the appropriate delimiter.

In the Calculator, element creates a new field TOTAL_SALARY which is the sum of the field values SALARY and BONUS.

In Table output, define the connection to the target database, the schema and the name of the table.

Choose Run from the top menu. The transform is started. On the screen, we can see the logs of launching our transform.

Pentaho DI, thanks to its graphic interface, allows the quick and easy design of an ETL process. In this chapter, we have shown only some of the functionality of this tool. Now, we’ll focus on running transforms from the Carte server using a solution implemented in Oracle APEX.

Carte is a simple web server that allows for jobs and transformations to be run remotely. In addition, it allows the jobs to be monitored, started and stopped. After downloading Pentaho DI, Carte can be started from the command line via the command:

|

1

|

Carte.bat configuration.xml

|

Where the configuration.xml file can look like this:

|

1

2

3

4

5

6

7

8

9

10

|

<slave_config>

<slaveserver>

<name>carte</name>

<hostname>localhost</hostname>

<port>8088</port>

</slaveserver>

<max_log_lines>10000</max_log_lines>

<max_log_timeout_minutes>1440</max_log_timeout_minutes>

<object_timeout_minutes>1440</object_timeout_minutes>

</slave_config>

|

In addition, it is worth mentioning that Carte provides its own API.

More about Carte can be found on the websites:

APEX Web Source Modules allows developers to access REST services. In addition, it provides the ability to use them in many Oracle APEX components or in a PL / SQL block, to invoke the service and process the responses. So, we can create our own Web Source Module and use it to integrate with Carte. The best part is that this step is quick and simple; it will take us but a moment.

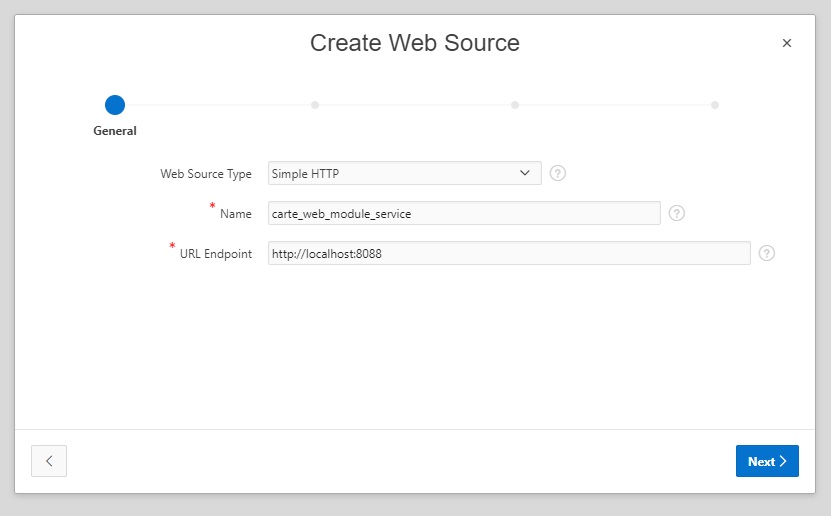

In Shared Components, in the Data Sources section, select the Web Source Modules option and then click the Create button. A wizard window will appear on the screen that will guide us through the entire process. At the beginning we choose the option to create our Web Source Module, in our example it will be ‘From scratch’ and we move on to the next step. Here we specify the Endpoint Name and URL.

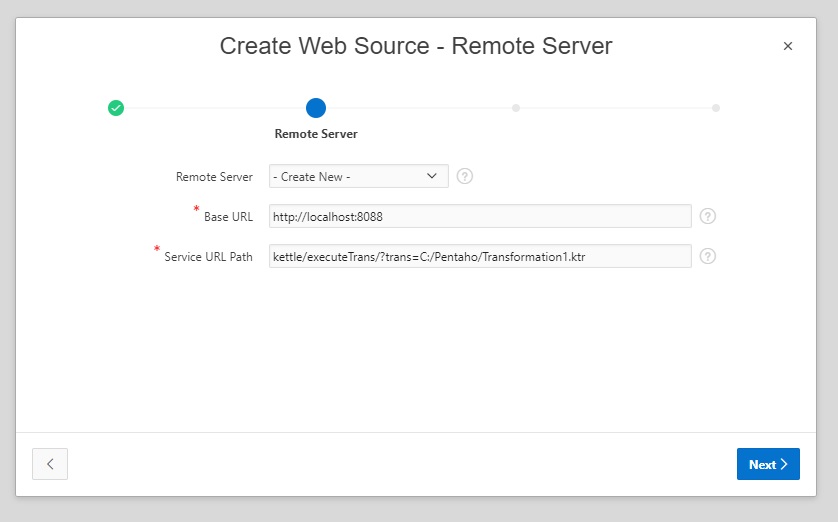

Then we define Base URL and Service URL Path, the address of the service we are interested in.

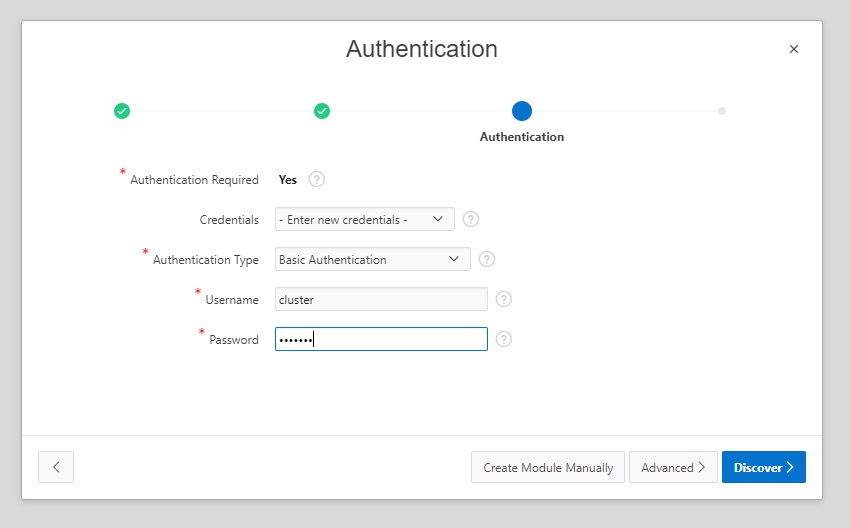

In the last step, we choose the type of authentication. In our example it will be the Basic option, and the login and password will be set as standard values for Carte (more on password management and Carte security can be found on the Pentaho website).

After creating the Web Source Module, we need to edit it. In the Operations section, select POST. In the Advanced section we set the appropriate Static ID value.

We’ve just created our own Web Source Module which we can call using PL / SQL code using the apex_exec package.

|

1

2

3

4

5

6

7

8

|

DECLARE

l_params apex_exec.t_parameters;

BEGIN

apex_exec.execute_web_source(

p_module_static_id => ‘carte_web_module_service_id’,

p_operation => ‘POST’,

p_parameters => l_params);

END;

|

If we do not decide to use Web Source Modules, we can run the transformation on the Carte server directly from PL/SQL code. Just write the PL/SQL procedure responsible for sending the HTTP request and receiving the response. For its implementation we will use the PL/SQL library mentioned in previous articles – utl_http.

|

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

|

PROCEDUREp_run_transformation

AS

l_url VARCHAR2(500) := ‘http://localhost:8088/kettle/executeTrans/?trans=C:/Pentaho/Transformation1.ktr’;

l_http_request UTL_HTTP.req;

l_http_response UTL_HTTP.resp;

l_carte_auth VARCHAR2(1000);

l_login VARCHAR2(10) := ‘cluster’;

l_password VARCHAR2(10) := ‘cluster’;

BEGIN

— Authorization

l_carte_auth := ‘Basic ‘||

utl_raw.cast_to_varchar2(

utl_encode.base64_encode(

utl_raw.cast_to_raw(l_login ||‘:’|| l_password)

)

);

— Make a HTTP request and get the response.

l_http_request := UTL_HTTP.begin_request (l_url, ‘POST’, ‘HTTP/1.1’);

UTL_HTTP.set_header(l_http_request, ‘Authorization’, l_carte_auth);

l_http_response := UTL_HTTP.get_response(l_http_request);

UTL_HTTP.end_response(l_http_response);

EXCEPTION

WHEN OTHERS THEN

dbms_output.put_line(‘Procedure name: p_run_transformation. Error occured: ‘ || sqlerrm);

UTL_HTTP.end_response(l_http_response);

END;

|

It is also worth mentioning about Pan and Kitchen. These are command line tools that allow you to run Pentaho transformations and jobs. They are usually used to schedule cron jobs. If we want to use the Pan tool in a PL/SQL block, this can be done using dbms_scheduler according to the following example:

|

1

2

3

4

5

6

7

8

9

|

BEGIN

DBMS_SCHEDULER.create_job(

job_name=> ‘RUN_PENTAHO_TRANSFORMATION’,

job_type=> ‘EXECUTABLE’,

job_action=> ‘C:PentahoPan.bat /file:C:PentahoTransformation1.ktr’,

enabled=> FALSE

);

DBMS_SCHEDULER.run_job(‘RUN_PENTAHO_TRANSFORMATION’, TRUE);

END;

|

After configuring Carte and preparing any of the above integration methods, it was time to create an Oracle APEX application that would allow the user to run transformations.

Sample solution:

The final result:

The first chapter was about the implementation of transformation responsible for extracting data from a CSV file (E), transforming the values of columns (T) and loading data into a table (L), that creating a complete ETL process. Later on, we presented ways to integrate Pentaho DI with the Oracle APEX application using Carte and APEX Web Source Modules, PL/SQL, jobs and the Pan tool. Particularly noteworthy is the first method, which is limited to a few clicks, and its implementation takes literally just a moment. The end result was the creation of a simple Oracle APEX Pentaho Manager application that allows us to run transformations.